I’ve been reading an absolutely fascinating book about how the brain works.¹ Lisa Feldman Barrett’s “How Emotions Are Made: The Secret Life of the Brain” was published in 2017, so I am definitely late to the party, but this topic is gaining new relevance with the release of Gen AI tools geared towards recognizing and interpreting emotions in voice and face (e.g., Hume and GPT-4o).

The book sums up years of research that marks a paradigm shift. The main premise is that emotions aren’t hardwired; they don’t just happen to you. Emotions are not built into your brain. Emotions are guesses that your brain constructs in the moment. Emotions are simulations.

In fact, we simulate emotional instances ourselves, so quickly that the process goes completely unnoticed. This simulation is based on several factors: your physiological state, the context of the situation, and your ability to differentiate and label emotions. (Some people have a rather limited ability to do that; we all have a friend or two like that.)

According to Feldman Barrett’s research, emotions aren’t located in our face and body (or brain), and so there is no single tone, facial expression or physiological “fingerprint” for any one emotion. She argues, contrary to the traditional view, that emotions are not innate and universally recognizable but are in fact socially constructed concepts. Variance, not conformity, is the rule when it comes to emotional expression. As we grow, we learn to categorize certain tones, facial expressions and behaviors into accepted emotional concepts, such as “anger”, “joy”, “boredom”, “fear”, and so on.

If emotions (and their expression) are not hardwired and universal attributes of humans, can we teach our machines to read us? Can machines detect emotion by reading facial expressions?

Well, only to some extent. In real life, people move their faces in a variety of ways to express a given emotion, depending on the context. There is no one-to-one mapping between a facial expression and an emotion.

So, what kind of training data is used to train algorithms for facial expression recognition? Typically, it is based on emotional stereotypes, with each facial expression rigidly labeled as one specific emotion. This approach can fail to capture the nuanced ways emotions are actually conveyed.

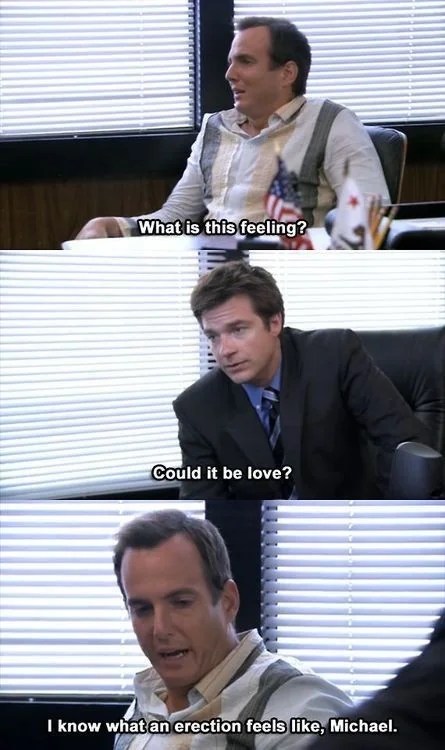

At the same time, there are exciting new developments in the machine vs. emotion domain. Take today’s OpenAI demo. At one point, OpenAI’s Barrett Zoph asks the system to detect his emotion:

In a raspy, Scarlett Johansson-y voice, the model replies: “It looks like you’re feeling pretty happy and cheerful […] whatever’s going on, it seems you’re in [a] great mood.”

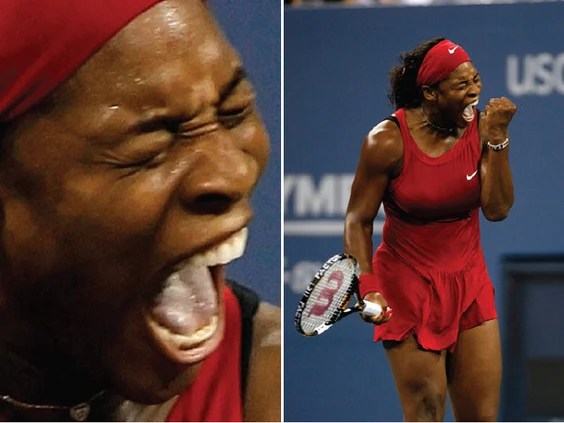

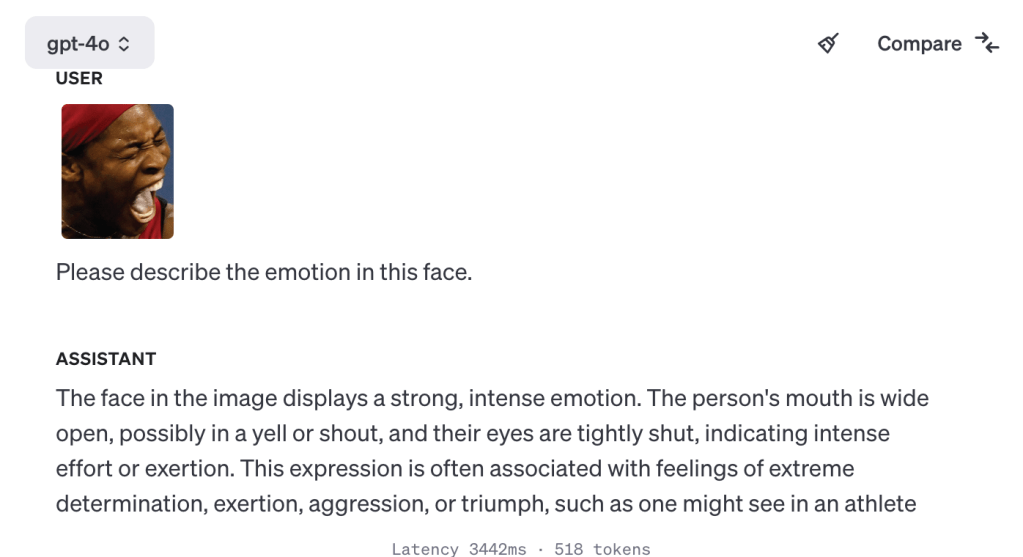

Even though happiness is considered to be the easiest emotion to detect (and the one that comes closest to being universal), today’s demo of GPT-4o was pretty impressive, if not eerie. It even nails the Serena Williams face test.

We might never know what’s under the hood of GPT-4o, but... what can I say? It’s *Her* world now.
